Tharindu Adikari

PhD graduate from the University of Toronto. Researcher in Distributed Computing, Machine Learning, and Information Theory.

About

I obtained both my PhD and MASc degrees from the Department of Electrical and Computer Engineering at the University of Toronto, Canada, in 2024 and 2018, respectively. During this period, I conducted research on distributed optimization, machine learning, and multi-user information theory under the supervision of Prof. Stark C. Draper.

I received my B.Sc. degree in 2014 from the University of Moratuwa, Sri Lanka. From 2014 to 2016 I worked with LSEG Technology (formerly known as MillenniumIT), Sri Lanka, focusing on the development of technology for Stock Market Surveillance.

Feel free to visit my LinkedIn and GitHub pages.

I am an enthusiast of rephotography, capturing then-and-now photos. Some selected items from my collection are posted here.

Selected Projects + Publications

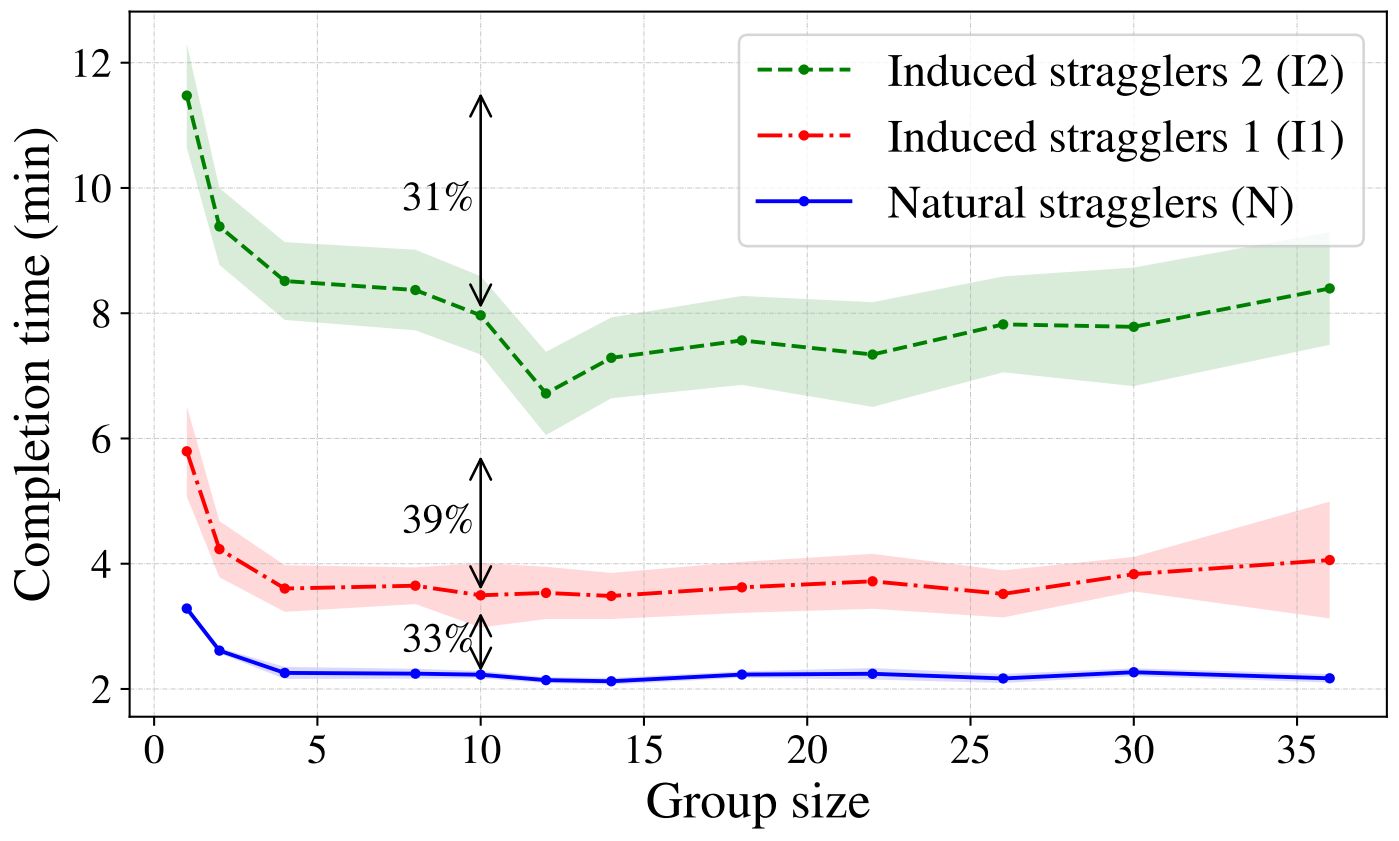

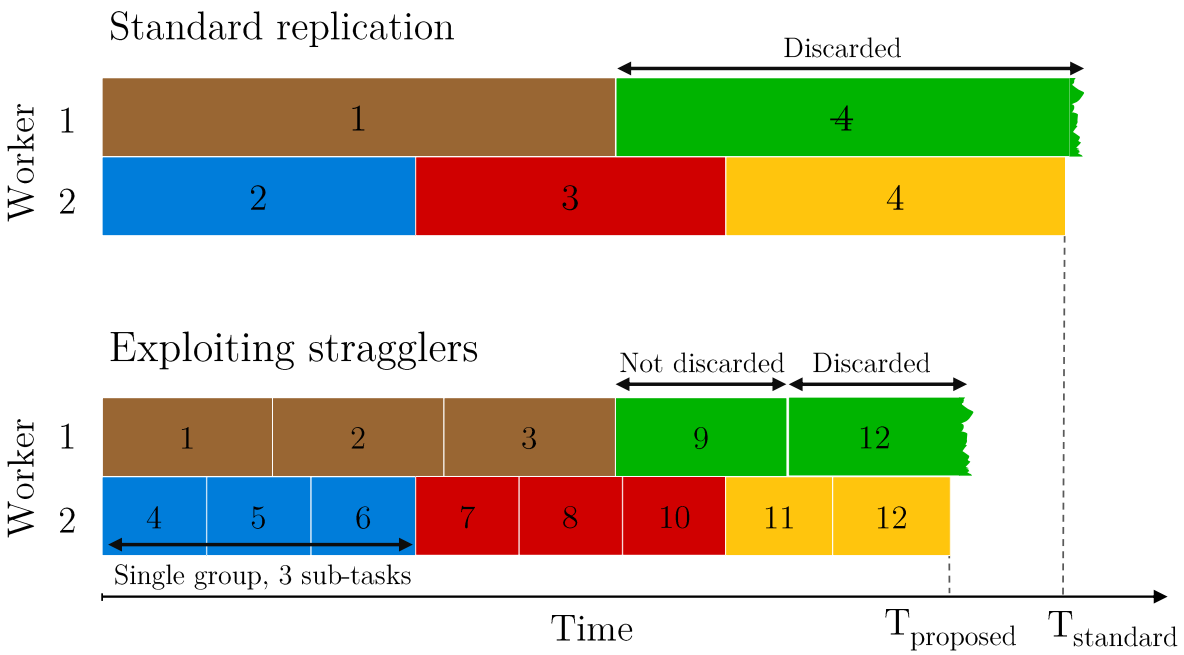

Exploiting Stragglers in Distributed Computing Systems with Task Grouping - Published in TSC ‘24 [Paper, Arxiv]

| A method is proposed for exploiting stragglers in the context of general workloads, by generalizing standard replication. Evaluations on real-world datasets and clusters show up to 30% time savings. |  |

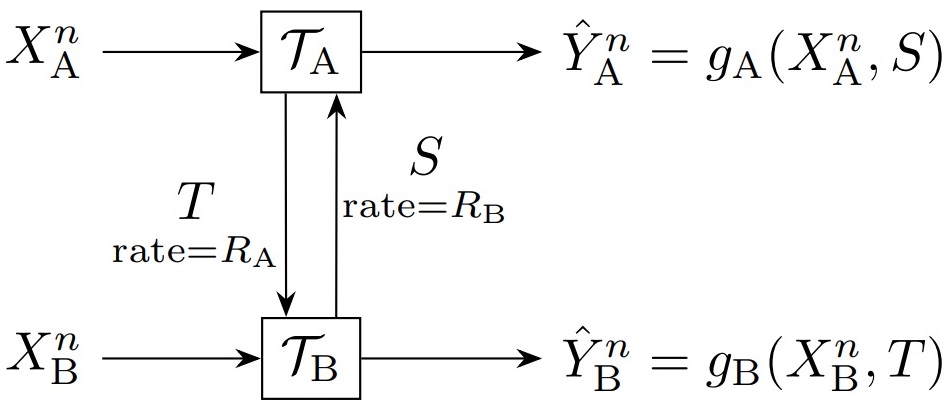

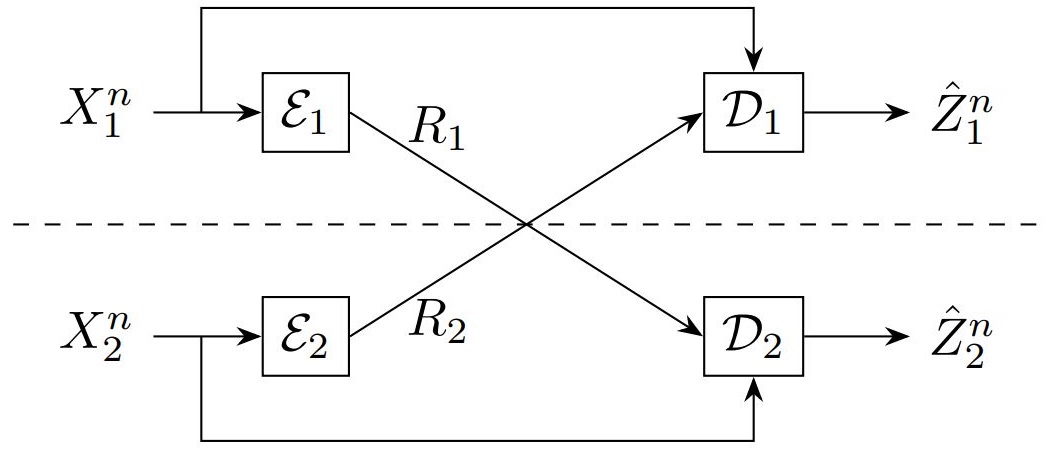

Common Function Reconstruction with Information Swapping Terminals (CFR) - Presented at ISIT July ‘24 [Paper]

| An achievability result and a converse for the CFR problem are presented. In CFR, two terminals swap information and both reconstruct deterministic functions of the sources, and the two reconstructions must be identical with high probability. |  |

Straggler Exploitation in Distributed Computing Systems with Task Grouping - Presented at Allerton Conf. Sep ‘23 [Paper, Slides]

| A method is proposed for exploiting work done by stragglers, nodes that randomly become slow in large compute clusters. The proposed method offers up to 30% time savings compared to a baseline. |  |

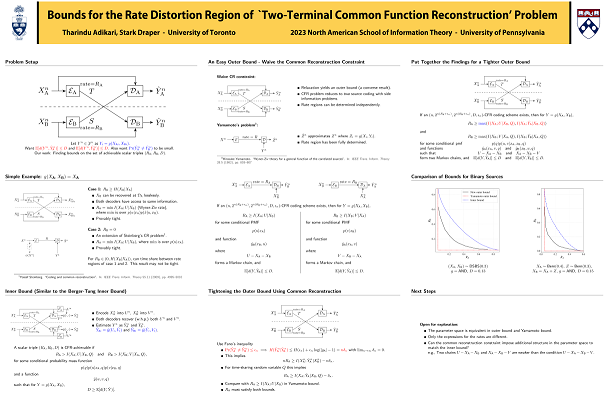

Bounds for the Rate Distortion Region of ‘Two-Terminal Common Function Reconstruction (CFR)’ Problem - Presented at NASIT June ‘23 [Poster]

| An achievability result and a converse for the rate distortion region of the CFR problem are obtained. In the CFR problem, two terminals approximate a function output, and their approximations must be identical with high probability. |  |

Two-Terminal Source Coding With Common Sum Reconstruction (CSR) - Presented at ISIT June ‘22 [Paper, Slides, Arxiv]

| An analysis of the CSR problem is presented. In CSR, two terminals want to reconstruct the sum of two correlated sources, and their reconstructions must be identical with high probability. |  |

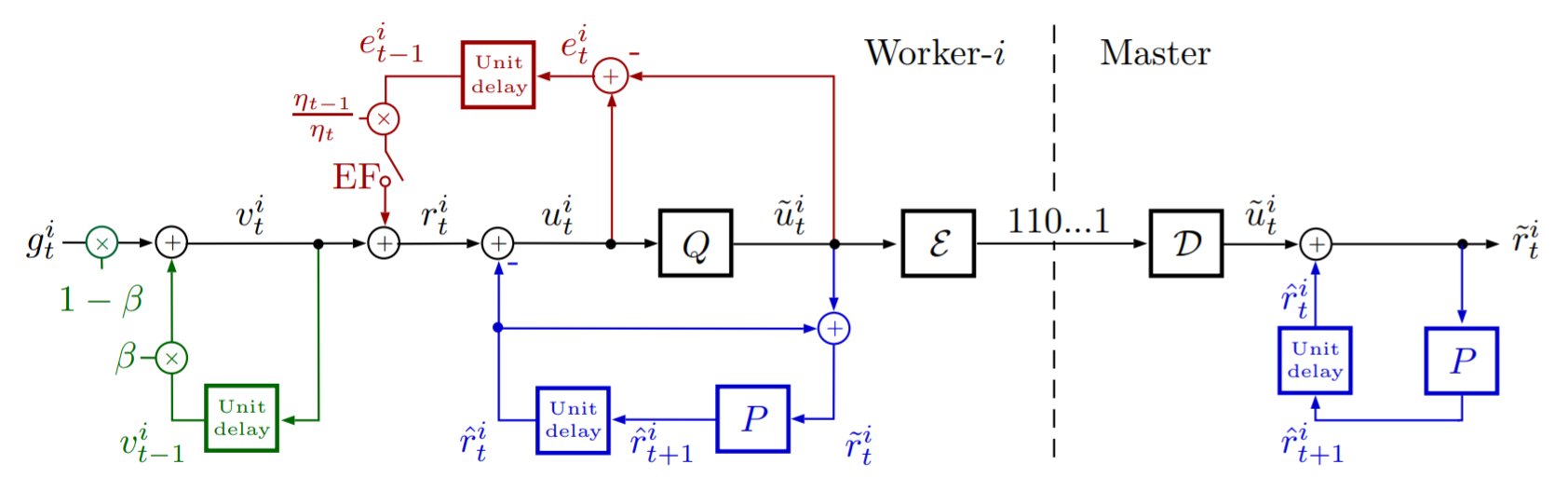

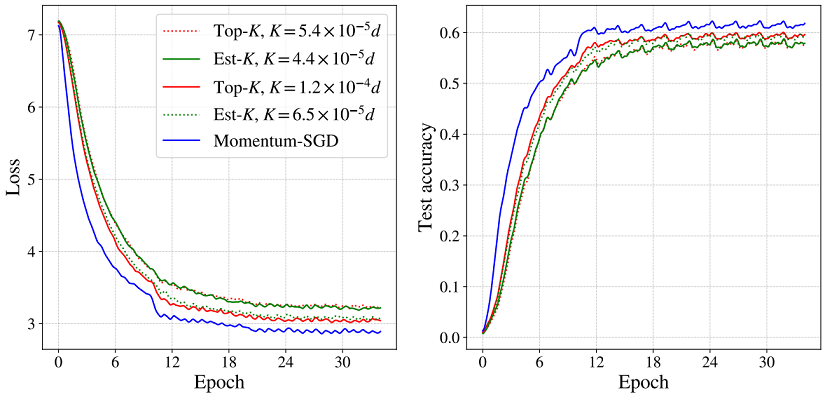

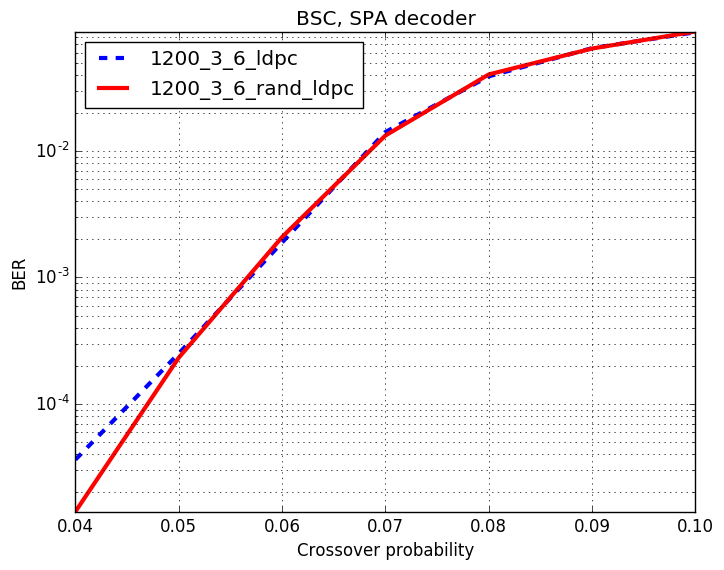

Compressing Gradients by Exploiting Temporal Correlation in Momentum-SGD - Published in JSAIT ‘21 [Paper, Arxiv]

| A predictive-coding-based approach is presented for exploiting memory in consecutive momentum-SGD update vectors, along with a novel predictor design for systems with error-feedback. |  |

Exploitation of Temporal Structure in Momentum-SGD for Gradient Compression - Presented at ISTC Aug ‘21 [Paper, Slides]

| A method is proposed for exploiting the temporal structure in consecutive momentum-SGD updates for systems with and without error-feedback. |  |

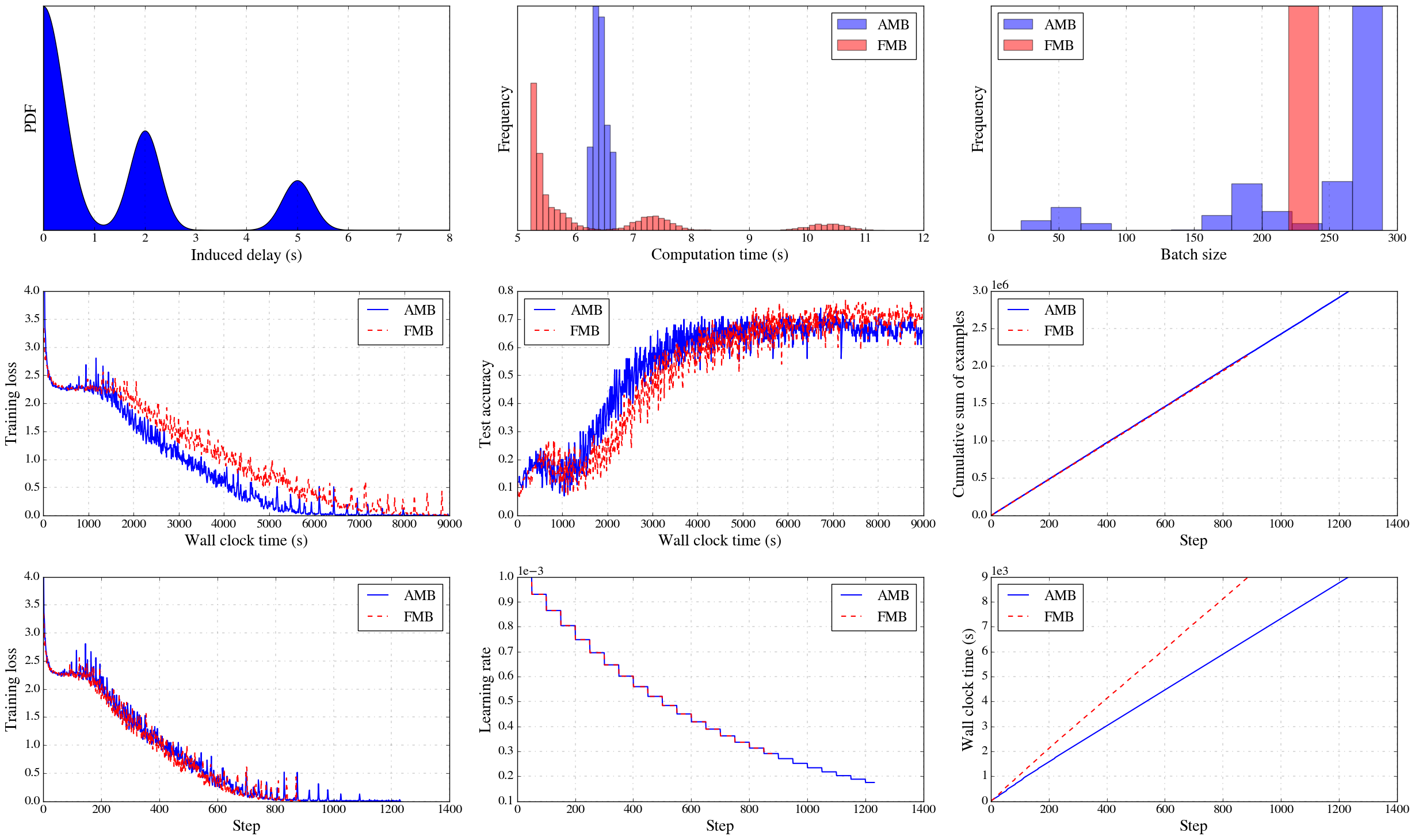

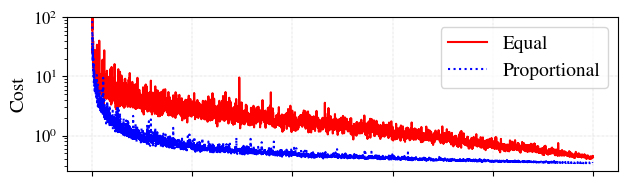

Asynchronous Delayed Optimization With Time-Varying Minibatches - Published in JSAIT ‘21 [Paper, Code]

| TensorFlow implementation of SGD on a master-worker system with time-varying minibatch sizes, i.e., when workers complete different amounts of work due to straggling. |  |

Decentralized Optimization with Non-Identical Sampling in Presence of Stragglers - Presented at ICASSP May ‘20 [Paper, Slides, Arxiv, Code]

| An analysis of decentralized consensus optimization (e.g., optimization on a graph) when workers sample data from non-identical distributions and perform variable amounts of work due to stragglers (slow nodes). |   |

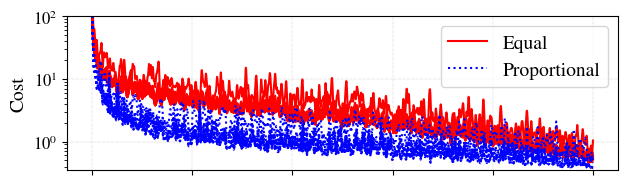

Efficient Learning of Neighbor Representations for Boundary Trees and Forests - Presented at CISS Mar ‘19 [Paper, Slides, Arxiv, Code]

| This project builds on the “Differentiable Boundary Trees” algorithm by Zoran et al. One possible implementation of their algorithm is here. |  |

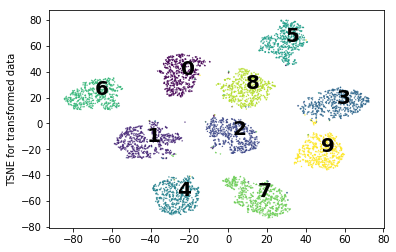

Python/NumPy Implementation of a Few Iterative Decoders for LDPC Codes [Code]

| Includes implementations of the min-sum and sum-product algorithms using sparse matrices (scipy.sparse), maximum-likelihood (ML) and linear-programming (LP) decoders, and an ADMM decoder. |  |
